On automation, amnesia and events

Last month I wired up an LLM module that screens job applicants. It works well. Much better than me, actually. Processing real documents, catching real issues. I sipped my coffee and considered updating my LinkedIn bio to AI Visionary.

This feeling lasted about forty-five minutes. Then someone on the ops team asked why an applicant was stuck in the pipeline for two weeks. Turns out, our beautiful automation had the long-term memory of Guy Pearce in Memento.

I've been building these modules for a couple of years now. Each one works. But I keep hitting a wall when I try to connect them end-to-end. The wall is organisational, not technical.

The ceiling on your enterprise agent strategy isn't model capability. It's whether your organisation has agreed on how to remember things.

Automated silos are still silos

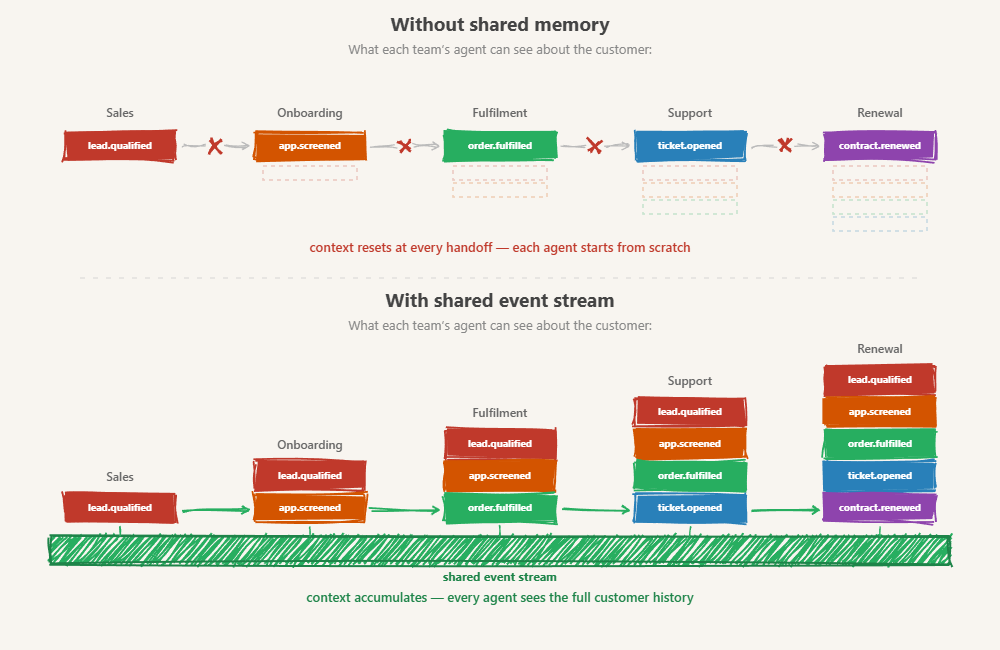

Every division is automating its own piece. One team automates lead qualification. Another automates onboarding. Another automates fulfilment. Another automates support. Each builds its own agent coalition, and each coalition handles its domain just fine. All the respective product managers grin and write "AI agents shipped, cut costs by 95%" on their CVs.

But a customer doesn't live inside one division.

They flow through the whole business. Discovered, evaluated, signed, onboarded, served, supported, renewed. The value is in the coherence of that end-to-end journey, not in any single team's local win.

When a customer crosses from one team's domain to the next, the receiving agents have no idea what was flagged, waived, or decided upstream. Five teams with five agent coalitions, collectively dumber than one ops person who had access to all the spreadsheets.

Say an agent needs to handle a support escalation. It has to rummage through the sales CRM, the risk database, the onboarding system, the product database, the billing platform. Different schemas. Different IDs for the same customer. A jigsaw puzzle where the pieces are spread across five rooms.

Now picture the alternative: a clean event stream. One chronological story. Signed up, evaluated, approved, onboarded, activated, first issue, stuck 14 days. The agent spends its intelligence on solving the problem instead of assembling it from scratch.
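Here is a rough sketch of that "one chronological story", assuming every team tags its events with the same customer ID. The field names, event types and sample data are made up for illustration and don't come from any real system.

```python
from datetime import datetime, timezone

# Hypothetical events published by different teams, in arrival order (not time order).
events = [
    {"entity": "customer-17", "type": "customer.onboarded", "timestamp": "2025-03-04T09:00:00+00:00"},
    {"entity": "customer-17", "type": "customer.signed_up", "timestamp": "2025-03-01T10:15:00+00:00"},
    {"entity": "customer-99", "type": "customer.signed_up", "timestamp": "2025-03-02T08:00:00+00:00"},
    {"entity": "customer-17", "type": "customer.approved", "timestamp": "2025-03-02T14:30:00+00:00"},
]

def timeline(events, entity):
    """Return one entity's events, oldest first."""
    own = [e for e in events if e["entity"] == entity]
    return sorted(own, key=lambda e: datetime.fromisoformat(e["timestamp"]))

def days_since_last_event(events, entity, now):
    """How many whole days the entity has been sitting at its latest step."""
    last = timeline(events, entity)[-1]
    return (now - datetime.fromisoformat(last["timestamp"])).days

story = [e["type"] for e in timeline(events, "customer-17")]
now = datetime(2025, 3, 18, 9, 0, tzinfo=timezone.utc)
stuck_days = days_since_last_event(events, "customer-17", now)  # 14
```

The agent never joins five schemas here. It filters on one ID and sorts on one timestamp, which is the whole point.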

The difference isn't just architectural. State tells you the patient is dead. Events tell you why.

"Just give agents database access"

A dev friend pushed back on this. If agents need context, give them database access. Let them query what they need. Models are smart enough.

Half right. For investigation (figuring out why something went wrong after the fact), database access works. An agent can query five systems, reconstruct the timeline, and give you an answer. It's slow, it's expensive, and it might hallucinate some joins (might be a skill issue), but it gets you there.

Operational coordination is a different animal. Investigation is forensic. Coordination is live. When your risk team's agents flag a pattern in incoming applications, the onboarding team doesn't need to investigate that three weeks later. It needs to know now. Database access gives you archaeology. Events give you a nervous system.

Related argument: context windows are big enough, just dump everything in. This doesn't work either. Context windows are session-level, not long-term memory. And models reliably perform worse on operational tasks when you dump everything in than when you curate what matters. Total recall isn't the goal. The right memory at the right time is. The answer to "agents can't remember" is not "give them more to read."
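To make "curate what matters" a bit more concrete, here is a minimal sketch of picking a handful of relevant events for one task instead of handing the model the full history. The `curate` helper, the event types and the sample data are all hypothetical, and a real system would probably rank relevance with something smarter than a fixed allow-list.

```python
def curate(events, entity, relevant_types, limit=5):
    """Pick the newest `limit` events about one entity that matter for the task."""
    if limit <= 0:
        return []
    matching = [
        e for e in events
        if e["entity"] == entity and e["type"] in relevant_types
    ]
    # ISO 8601 timestamps in the same format and timezone sort chronologically as strings.
    matching.sort(key=lambda e: e["timestamp"])
    return matching[-limit:]

# Hypothetical history for one customer, plus noise from another.
history = [
    {"entity": "customer-17", "type": "marketing.email_opened", "timestamp": "2025-03-01T08:00:00+00:00"},
    {"entity": "customer-17", "type": "onboarding.validation_skipped", "timestamp": "2025-03-03T11:00:00+00:00"},
    {"entity": "customer-42", "type": "support.ticket_opened", "timestamp": "2025-03-05T09:00:00+00:00"},
    {"entity": "customer-17", "type": "support.ticket_opened", "timestamp": "2025-03-09T16:45:00+00:00"},
]

# What a support-escalation agent might care about (an assumption, not a rule).
support_context = curate(
    history,
    entity="customer-17",
    relevant_types={"onboarding.validation_skipped", "support.ticket_opened"},
)
```

The marketing event and the other customer's ticket never reach the prompt. Less to read, more to work with.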

Three memory problems are emerging:

- What happened in this conversation (session memory)

- What this agent knows across conversations (agent memory)

- What all agents across the business collectively know (organisational memory)

The industry is making progress on the first two. Mem0, Letta, various retrieval architectures. The third, organisational memory, is largely unsolved (OpenAI is making strides here, and we'll see more of this in 2026). MCP is moving toward stateless-by-default in its next spec revision. A2A solves agent discovery and coordination but deliberately punts on persistent shared memory. The standardisation efforts are leaving organisational memory to you.

Why nobody does it (incentives, obviously)

You can't ask teams to plan everything with dependencies in mind. Velocity dies. Engineering leaders know this debt is piling up. They ship anyway, because incentives reward shipping. Integration is a problem for later.

Everyone rationally chooses velocity over cross-team coherence. The cost is invisible until it isn't. Until what should take a sprint takes a month. At current speeds, read that as: what should take a day takes a weekend.

And most enterprises think they already have the answer. Kafka clusters, EventBridge, Pub/Sub. The plumbing exists. But the gap isn't plumbing. It's semantics. A Kafka topic carrying database change events is not a curated business event stream that an agent can consume without a schema doc and a prayer. The new requirement is events designed for agent consumption: entity-centric, temporally ordered, semantically self-describing. Nobody owns that yet.
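As a sketch of what "entity-centric, temporally ordered, semantically self-describing" might mean in practice, here is one possible event envelope with a small lint check. The `BusinessEvent` class, its fields and the rules in `problems` are assumptions for illustration, not an established standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class BusinessEvent:
    type: str              # what happened, in business terms, e.g. "application.screened"
    entity: str            # who it happened to: one ID shared by every team
    occurred_at: datetime  # when it happened, timezone-aware so ordering is unambiguous
    summary: str           # plain-language description an agent can read without a schema doc
    data: dict = field(default_factory=dict)  # structured details

def problems(event):
    """Return the reasons an event is not fit for agent consumption (empty list if none)."""
    found = []
    if "." not in event.type:
        found.append("type should look like '<noun>.<what_happened>'")
    if not event.entity:
        found.append("entity is empty")
    if event.occurred_at.tzinfo is None:
        found.append("occurred_at has no timezone")
    if not event.summary.strip():
        found.append("summary is empty")
    return found

good = BusinessEvent(
    type="application.screened",
    entity="applicant-4821",
    occurred_at=datetime(2025, 3, 1, 10, 15, tzinfo=timezone.utc),
    summary="Screening flagged the application: certification is missing.",
    data={"result": "flagged", "reason": "missing_certification"},
)

# Roughly what a raw database change event looks like when forced into the same shape.
raw_change = BusinessEvent(type="UPDATE", entity="", occurred_at=datetime(2025, 3, 1), summary="")
```

The second event carries the same information somewhere in its payload, but it fails every check. That is the gap between plumbing and semantics.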

MIT found that 95% of enterprise gen-AI pilots fail to deliver measurable business impact. Not because the models are bad. Because integration, data access, and cross-functional governance are organisational nightmares that nobody budgeted for. The demo works in a week. The enterprise deployment takes a year.

I've seen teams build elaborate data pipelines to reconstruct information that should have been published as it happened. I've seen integrations held together by credential-locked spreadsheets processed by macros (God help us). They're solving real problems with whatever they've got. But each workaround is a system trying to remember things it was never designed to record.

What it looks like

Picture this: support agents notice a spike in complaints from a specific customer segment. They all went through an expedited onboarding flow that skipped a validation step. That signal flows to the onboarding team, which fixes the gap. Sales sees the change and adjusts what they promise. The loop closes across four teams without anyone calling a meeting.

The mechanism is straightforward. Each team publishes what actually happens (a document verified, a status changed, a score assigned) as events to a shared bus with a common entity schema. Team A ships an automated screening module and publishes application.screened. Team B is building quality monitoring. Without shared events, Team B negotiates database access, builds a custom integration, or requests a CSV export. With shared events, they subscribe. No meetings. No week-long integration project.

Concretely: when the screening module finishes processing an applicant, it publishes an event like {"type": "application.screened", "entity": "applicant-4821", "result": "flagged", "reason": "missing_certification", "timestamp": "..."} to the shared bus. The quality monitoring module subscribes to application.screened events, gets the payload in real time, and acts on it. The schema is the contract.
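A minimal sketch of that publish/subscribe handoff, using an in-memory stand-in for the shared bus. The `EventBus` class and the `monitor_quality` handler are hypothetical. In production the bus would be whatever you already run (Kafka, EventBridge, Pub/Sub), and the timestamp stays elided as in the example above.

```python
from collections import defaultdict

class EventBus:
    """In-memory stand-in for a shared event bus: an append-only log plus subscriptions."""

    def __init__(self):
        self.log = []                          # everything ever published, in order
        self._subscribers = defaultdict(list)  # event type -> handlers

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event):
        self.log.append(event)
        for handler in self._subscribers[event["type"]]:
            handler(event)

bus = EventBus()
flagged = []

# Team B: quality monitoring. It knows the event schema, not Team A's database.
def monitor_quality(event):
    if event["result"] == "flagged":
        flagged.append((event["entity"], event["reason"]))

bus.subscribe("application.screened", monitor_quality)

# Team A: the screening module publishes what happened.
bus.publish({
    "type": "application.screened",
    "entity": "applicant-4821",
    "result": "flagged",
    "reason": "missing_certification",
    "timestamp": "...",
})
```

Team A never imports anything from Team B, and vice versa. The only thing they share is the shape of the event.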

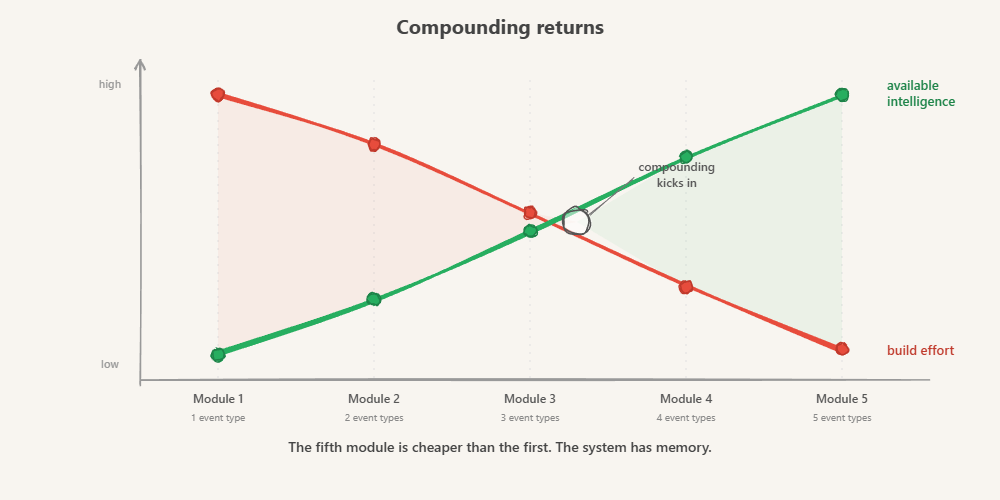

The fifth module ends up cheaper to build than the first, because the infrastructure is there and the system has memory. That's compounding, in both cost and intelligence. Each new module makes the whole system smarter because it can see what every other module did.

This is the kicker for most org heads and platform leaders. Coordinating divisions used to take cadres of middle management. People in meetings. People reading dashboards. People walking over to ask questions. Slow, but effective.

Those humans are now being replaced by agents that can't walk over and ask.

The informal coordination layer that people provided for free is disappearing. Shared memory replaces the hallway conversation.

If you've been around long enough, this sounds familiar. Enterprise service buses promised exactly this in the 2000s and collapsed under their own weight. The difference: ESBs tried to be the orchestration layer, heavy middleware that owned the business logic and required consensus on everything. A shared event stream is dumber than that. It's a log. Teams publish what happened, other teams subscribe to what they care about. No central orchestrator deciding who gets what. And if you've tried event sourcing as an internal service pattern and found it painful, this is a different scope: cross-team, entity-centric, and designed for agent consumption, not for rebuilding application state.

And if you're wondering where to start when you have two hundred services and none of them publish business events: pick one handoff. The ugliest one. The one where context dies every time a customer crosses from one team to another. Prove the value there. Expand.

Memory

Borges wrote about a man who remembers everything. Every leaf, every sensation. He is paralysed by it. Total recall isn't the answer either. Shared memory is: curated, structured, available when it matters.

If you're deploying agents across business lines without that, you will hit this wall. Your individual modules will work. Your connected pipeline won't. And the root cause will be amnesia your agents inherited from an organisation that never learned to publish what it knows.

Everything interesting starts with memory.